Integers on a computer are simple and behave in predictable ways. Floating point numbers are not and don’t always respectively – I’ll explain what they are and why below. Working with floating point numbers is also slightly like cleaning up after my dogs, which I will also explain below.

Integers

Integers are a bit like the classical mechanics part of physics – they are straightforward and well-behaved. Most of what you do every day (apart from dividing) will work with integers. If you know the minimum value an integer can hold (e.g. -2,147,483,648), and the maximum value it can hold (e.g. 2,147,483,647), you can be confident that it will also hold every value in between, reliably and unambiguously. You will be able to compare two integers and be confident in the results of the comparison – are these two integers the same or not?

You will be able to do some very useful things with just integers:

- Addition, subtraction, multiplication of integers;

- Count or summing a set of integers;

- Calculate the min, max or mode of a set of integers;

- Label things uniquely, e.g. give a (unique) primary key value to every row in a database table (but watch out for security vulnerabilities with that approach).

The important thing is that the operations above take a set of integers and produce another integer as a result i.e. you don’t leave the world of integers.

Implementing integers

Depending on your environment, there will commonly be 16 or 32 bits of memory to hold an integer. How these bits are interpreted depends on whether you want to store a signed number (positive or negative) or an unsigned number (0 or greater).

If you want a signed number, one of the bits will store the sign and the remaining bits will store the number’s size. Because this is binary, the loss of one bit means the max size of the number will be half what it would be for an unsigned number stored in the same number of bits. (Each bit doubles the size of the biggest number you can store.)

So, a signed number with 32 bits will be up to 2,147,483,647 and with 16 bits it will be up to 32,767.

An unsigned number with 32 bits will be up to 4,294,967,295 and with 16 bits it will be up to 65,535.

The need for floating point numbers

The list of operations above that you can do with just integers is good but has a few holes. You can’t store or multiply by fractional things, e.g. 3.5. This means that interest rates, tax rates, discounts and commissions are probably impossible.

There is no division, and hence no mean, median or percentages either. If you only ever divide integers by one of their factors, e.g. 30 by 2, 3, 5,6, 10 or 15 then integers will be fine for the result too. However, if you want to divide 30 by 4 you need some way to store something that isn’t an integer – either some way to encode the operation, e.g. the pair of integers (30,4) or (15,2), or its fractional result 7.5.

Storing a pair of integers that are the elements of a division is fine for some numbers (the rational numbers) but not others (the irrational numbers) which by definition can’t be defined as the result of dividing two numbers. Some irrational numbers are useful like pi, e or the square root of 2.

If we want to store both rational and irrational numbers, what do we do? We could have a string of digits and then say where to put the decimal point. This is what happens in e.g. C#’s decimal type. This is a relatively large and uncommon data type.

The more common way to store floating point numbers is based on scientific notation. Scientific notation is a * 10b. a is called the mantissa, and b is the exponent. a is always a decimal greater than -10 and less than 10. b is an integer that can be positive or negative.

You can think of the mantissa as being the core part of the number, and then the exponent says where to shift it (left or right) along the number line, by repeatedly multiplying it or dividing it by 10.

So large numbers, like Avogadro’s constant, will look like this 6.02214076×1023. You have taken 6.02214076 and shifted it to the left along the number line by 23 places, leaving the decimal point behind (to the right) as the number gets repeatedly multiplied by 10. Small numbers, like Planck’s constant, will look like this 6.62607004 × 10-34. You have taken 6.62607004 and shifted it to the right along the number line by 34 places, leaving the decimal point behind (to the left) as the number gets repeatedly divided by 10.

In practice, the storage is a bit more complicated than I’m presenting – for instance it is based on base 2 rather than base 10. However, the same basic ideas and considerations apply. As people will probably be more familiar with scientific notation in base 10 than in base 2, I’m sticking with base 10 for this article.

The theory behind the problem with floating point numbers

If integers are like classical mechanics in physics, then floating point numbers are like quantum physics or relativity. A lot of everyday experience has led you to assume that certain things were always true, but now they’re not. This can cause you (and your code) to trip up, when a violated assumption suddenly jumps out of the woodwork. However, floating point allows you to do things that you just can’t do with integers, so if you’re careful to manage their limitations they can be very useful.

We are trying to represent real numbers. There are infinitely many real numbers between any two real numbers – even between e.g. 0 and 1. You can always add an extra decimal place, and that multiplies the quantity of numbers by 10. However, we have only finite space to store a given number, and the clash between an infinitely big set of things and finite storage for them is the basic problem.

Here is a silly and exaggerated example to illustrate the point. We will simplify and reduce things down like this:

- Positive numbers only, so no sign bit

- 2 bits for the mantissa

- 3 bits for the exponent

0 sign bits + 2 mantissa bits + 3 exponent bits = 5 bits total. Normally, floating point numbers would be stored using 32 or 64 bits, so the problems I’m about to show happen less often but are still there.

2 bits for the mantissa means we can store only 0, 1, 2 and 3. We will start off with the exponent holding 0. 100 = 1, so we will have 0…3 x 1, i.e. 0, 1, 2, 3.

After we have got to 3 we have run out of bits in the mantissa and so can’t use it to represent numbers any bigger. To get bigger we will need to increase the exponent from 0 to 1. This opens up all the mantissa values again. 101 = 10, so we will have 0…3 x 10, i.e. 0, 10, 20, 30.

We have run out of mantissa values again, so to go bigger we will have to increase the exponent from 1 to 2 (don’t worry, we won’t keep doing this forever). 102 = 100, so we will have 0…3 x 100, i.e. 0, 100, 200, 300.

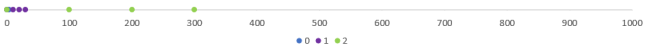

You can see these values in these diagrams:

As I said, this is an extreme example. The mantissa is so small that it won’t even reach up to the smallest value stored by the next exponent value. For instance, we stop at 3 rather than reaching to 9, and stop again at 30 rather than reaching 90. However, it shows the basic mechanism and its limitations:

- Not all numbers can be represented (this is to be expected, given the mismatch between infinitely many numbers to store in finite space)

- The numbers that can be represented are not uniformly spread out along the number line – they cluster around 0.

A concrete example of the problem with floating point numbers

Here is some C# that shows a problem with floating point numbers in practice. It shows two examples where we do things with pairs of floats. In the first example, we just assign one float from the other in the pair – these look the same when we print them and are the same when we compare them.

In the second example, we start with the same pair of small numbers. We then do some maths on one of the floats that we expect will produce no net effect – multiplying by a constant repeatedly, and then dividing by the same constant the same number of times. Just to make sure the number isn’t overflowing when it’s at its biggest, we will print it out too. It’s 7.222 * 1022, which is within the max value of a float (3.4 * 1038).

The output is below – the first example is well-behaved, but the second example isn’t.

direct assignment 0.00000000000000030000000000000000000 0.00000000000000030000000000000000000 they are the same different path to same answer at its biggest, p is 72220270000000000000000.00000000 0.00000000000000029999990000000000000 0.00000000000000030000000000000000000 they are different

Here is the code:

class Program

{

static void Main(string[] args)

{

DirectAssignment();

DifferentPathToSameAnswer();

}

static void DirectAssignment()

{

float a, b;

a = 0.0000000000000003F;

b = a;

Console.WriteLine("\ndirect assignment");

TestPair(a, b);

}

static void DifferentPathToSameAnswer()

{

int totalIterations = 100;

float p, q, r;

p = 0.0000000000000003F;

q = p;

r = 2.42F;

for(int iteration = 0; iteration < totalIterations; iteration++)

{

p = p * r;

}

Console.WriteLine("\ndifferent path to same answer");

Console.WriteLine("at its biggest, p is " + p.ToString("0.00000000"));

for (int iteration = 0; iteration < totalIterations; iteration++)

{

p = p / r;

}

TestPair(p, q);

}

static void TestPair(float x, float y)

{

const string floatFormat = "0.00000000000000000000000000000000000";

Console.WriteLine(x.ToString(floatFormat));

Console.WriteLine(y.ToString(floatFormat));

if (x == y)

{

Console.WriteLine("they are the same");

}

else

{

Console.WriteLine("they are different");

}

}

}

What does working with floating point numbers have to do with cleaning up after dogs?

If you’re adding up a set of numbers, you should add them in the order smallest first to biggest last. In my experience, it’s like cleaning up the various lumps of poo my dogs produce: pick up the smallest bit first and largest bit last. (This assumes that all the numbers are the same sign, and largest means furthest from zero.)

Imagine you want to add all the numbers 1…1,000,000. If you start at 1,000,000, after the first 4 values the subtotal will be 3,999,994, and when you are 4 numbers from the end the subtotal will be 499,999,499,989. You will then add the numbers 4, 3, 2, 1. The exponent will be set to accommodate the large subtotal, i.e. 11. You will then be adding relatively tiny things to this large subtotal, which relies on the mantissa having enough bits to stretch to the tiny new numbers. If there aren’t enough bits in the mantissa, the extra numbers will make no difference to the subtotal i.e. they will effectively not be included in the subtotal.

If you start at 1, after the first 4 values the subtotal will be 10, and when you are 4 numbers from the end the subtotal will be 499,995,499,995. You will then add the numbers 999,997 … 1,000,000. The new numbers are much closer to the subtotal in terms of size, and so they are much more likely to be accommodated in the mantissa, which will give you an accurate result.

Conclusion

Integers can be very useful but have limitations to what they can do. Floating point numbers largely lift those limitations but introduce limitations of their own. Being aware of the limitations of both kinds of number is crucial if you want to minimise the number of bugs in your code.

One of the syntactical advances made with Ada language is that syntax was provided to understand and control how binary floating point would work on a platform without needing to understand the hardware in detail (and code round it), Ada keywords available to control the model numbers, epsilon and interval. But I don’t think this was picked up in Java, C# or other more recent languages. Or indeed Excel.

I did work with one implementation of Pascal many years ago where the calculations and number storage was actually decimal (and therefore quite slow, comparatively, with many binary machine instructions needed to perform simple calculations stored as 2 BCD digits per byte). Of course you can still get irrational numbers in a decimal calculation, but most people learn that in secondary school mathematics and more readily understand and can work around that.

LikeLike